OpenHPC

m (→Various parts of the software stack are seeing an upgrade:: corrected a link) |

|||

| (15 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

__TOC__ | __TOC__ | ||

| − | = Introduction = | + | == Introduction == |

| − | Slurm upgrade | + | As part of the Viper upgrade, we are introducing a range of hardware and software updates to refresh Viper and enhance functionality. This upgrade is based on a migration from the existing Operating Systems and software stack to a new build based on up to date Operating System and the OpenHPC software stack. More features and improvements will come when the stack is rolled out onto the production service, but some initial key details, highlights and changes are detailed below. |

| + | |||

| + | === Familiar environment: === | ||

| + | * Generally the same familiar working environment based e.g. all slurm commands such as squeue will still work as normal | ||

| + | * The same home directory is in place, so all your files will be available | ||

| + | * A vast majority of workflows and job submission scripts should work without modification | ||

| + | |||

| + | === Key hardware updates: === | ||

| + | |||

| + | * Introduction of ten new compute nodes, each with 56 cores and 384GB of RAM. This will become part of a new queue of enhanced compute nodes available in addition to the standard compute nodes. | ||

| + | * New NVidia A40 GPU card in each of the GPU nodes. The upgraded GPU node offers more than double the single precision performance and the same GPU memory and number of CUDA cores in a single card as the existing GPU node did with 4x K40M cards in place, yet being a single card is a simpler platform to make use of all the resource. The new cards are also considerably more energy efficient. | ||

| + | |||

| + | === Various parts of the software stack are seeing an upgrade: === | ||

| + | |||

| + | * Operating System is being upgraded from CentOS 7.2 to CentOS 7.9. This is an important update to ensure maximum security and support but will also allow improved functionality (e.g. [[#Using Containers|Using Containers]]) | ||

| + | * Slurm is having a version upgrade to provide functionality, stability and security improvements | ||

| + | * While the existing Viper application stack will remain available, going forward a new application stack will supplement and eventually replace many of the applications, tools and libraries with new optimised and more up to date versions. | ||

| + | * Updated and improved cluster management stack, allowing us to provide more efficient and responsive updates and support. | ||

| + | |||

| + | == Connecting == | ||

| + | To connect to this system, just point your SSH connection to '''viperlogin.hpc.hull.ac.uk''' | ||

| + | |||

== Resource Requirements == | == Resource Requirements == | ||

| − | In order to reduce issues with tasks using more resource (CPU or RAM) than they should, Slurm will now | + | In order to reduce issues with tasks using more resource (CPU or RAM) than they should, Slurm will now use Linux cgroups to more closely monitor resource use of tasks and will limit (CPU) or terminate (RAM) tasks exceeding what they should use. In particular, this means it is important to request the correct amount of RAM needed for a task. |

{| class="wikitable" | {| class="wikitable" | ||

| − | |+ | + | |+Example memory request (standard compute) |

|- | |- | ||

|Default (no specific memory request) | |Default (no specific memory request) | ||

| Line 17: | Line 38: | ||

|128GB (i.e. 128GB for exclusive use) | |128GB (i.e. 128GB for exclusive use) | ||

|} | |} | ||

| − | |||

| − | |||

If a task is terminated due to exceeding the requested amount of memory, you should see a message in your Slurm error log file, such as: | If a task is terminated due to exceeding the requested amount of memory, you should see a message in your Slurm error log file, such as: | ||

| Line 25: | Line 44: | ||

slurmstepd: error: Detected 1 oom-kill event(s) in StepId=319.batch. Some of your processes may have been killed by the cgroup out-of-memory handler. | slurmstepd: error: Detected 1 oom-kill event(s) in StepId=319.batch. Some of your processes may have been killed by the cgroup out-of-memory handler. | ||

</pre> | </pre> | ||

| − | |||

== Job Emails == | == Job Emails == | ||

| Line 39: | Line 57: | ||

* ALL (equivalent to BEGIN, END, FAIL, INVALID_DEPEND, REQUEUE, and STAGE_OUT) | * ALL (equivalent to BEGIN, END, FAIL, INVALID_DEPEND, REQUEUE, and STAGE_OUT) | ||

* INVALID_DEPEND (dependency never satisfied) | * INVALID_DEPEND (dependency never satisfied) | ||

| − | * TIME_LIMIT, TIME_LIMIT_90 (reached 90 | + | * TIME_LIMIT, TIME_LIMIT_90 (reached 90 per cent of the time limit), TIME_LIMIT_80 (reached 80 per cent of the time limit), TIME_LIMIT_50 (reached 50 per cent of the time limit) * ARRAY_TASKS (sends emails for each array task otherwise job BEGIN, END and FAIL apply to a job array as a whole rather than generating individual email messages for each task in the job array). |

| − | The user to be notified is indicated with --mail-user, however only @hull.ac.uk email addresses are valid. | + | The user to be notified is indicated with --mail-user, however '''only @hull.ac.uk email addresses are valid.''' |

| − | If you want to be alerted when your task completes, | + | If you want to be alerted when your task completes, it is advised to use '''#SBATCH --mail-type=END,FAIL''' to catch if a job finishes cleanly or if it finishes due to an error. |

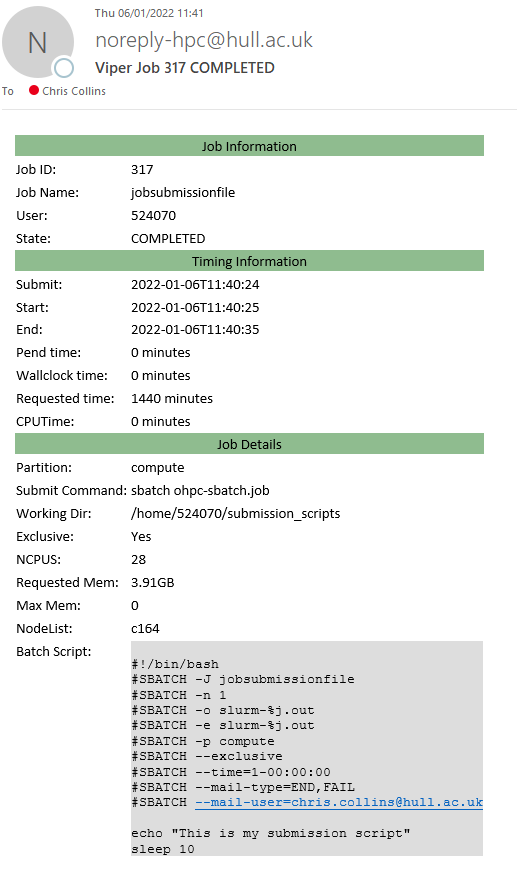

An example of a completion email is shown below: | An example of a completion email is shown below: | ||

| Line 71: | Line 89: | ||

== Using Containers == | == Using Containers == | ||

| + | Viper now supports the use of Containers, via Apptainer (previously called [https://www.linuxfoundation.org/press-release/new-linux-foundation-project-accelerates-collaboration-on-container-systems-between-enterprise-and-high-performance-computing-environments/ Singularity]). | ||

| + | |||

| + | Containers can be built to contain everything needed to run an application or code, independent of the Operating System. This means application/code, libraries, scripts or even data. This makes them ideal for reproducible science as experiments and data can be distributed as a single file. Generally, there is a negligible if any performance impact when running containers over bare metal. Containers are becoming an increasingly popular way of distributing applications and workflows. | ||

| + | |||

| + | Docker is perhaps the most well known form of containers, but Docker has security implications that mean it isn’t suitable for a shared user environment like HPC. Apptainer doesn't have the same security issues, indeed "''[Singularity] is optimized for compute focused enterprise and HPC workloads, allowing untrusted users to run untrusted containers in a trusted way''”. However, containers are built from a recipe files which include all the required components to support the workflow, and the recipe file can be based on an existing Docker container, allowing many existing Docker containers to be used with Singularity. | ||

| + | |||

| + | <pre style="background-color: black; color: white; border: 2px solid black; font-family: monospace, sans-serif;"> | ||

| + | #!/bin/bash | ||

| + | #SBATCH -J Sing-maxquant | ||

| + | #SBATCH -p gpu | ||

| + | #SBATCH -o %J.out | ||

| + | #SBATCH -e %J.err | ||

| + | #SBATCH --time=20:00:00 | ||

| + | #SBATCH --exclusive | ||

| + | |||

| + | module add test-modules singularity/3.5.3/gcc-8.2.0 | ||

| + | |||

| + | singularity exec /home/ViperAppsFiles/singularity/containers/maxquant.sif mono MaxQuant/bin/MaxQuantCmd.exe mqpar.xml | ||

| + | </pre> | ||

| + | |||

| + | {| class="wikitable" | ||

| + | |+Singularity/Apptainer command example | ||

| + | |- | ||

| + | | singularity | ||

| + | | Run Apptainer (Singularity) | ||

| + | |- | ||

| + | | exec | ||

| + | | Tell Apptainer (Singularity) to execute a command (rather than run a shell for example) | ||

| + | |- | ||

| + | | /home/ViperAppsFiles/singularity/containers/maxquant.sif | ||

| + | | Tell Apptainer (Singularity) the Container to use | ||

| + | |- | ||

| + | | mono MaxQuant/bin/MaxQuantCmd.exe mqpar.xml | ||

| + | | Run the command, in this case, the command is mono, with the dotnet application MaxQuantCmd.exe and with experiment configuration file mqpar.xml | ||

| + | |} | ||

| + | |||

| + | While most existing workflows are expected to work as usual or with minimal modifications following the upgrade from CentOS 7.2 to CentOS 7.9, we are aiming to provide an Apptainer container based on Viper's previous CentOS 7.2 compute node image, which should provide a platform for any workflows that do display issues. | ||

Latest revision as of 11:00, 10 November 2022


Introduction

As part of the Viper upgrade, we are introducing a range of hardware and software updates to refresh Viper and enhance its functionality. The upgrade migrates Viper from its existing operating system and software stack to a new build based on an up-to-date operating system and the OpenHPC software stack. More features and improvements will follow when the new stack is rolled out to the production service; some initial key details, highlights and changes are described below.

Familiar environment:

- Generally the same familiar working environment: for example, all Slurm commands such as squeue will still work as normal

- The same home directory is in place, so all your files will be available

- The vast majority of workflows and job submission scripts should work without modification

Key hardware updates:

- Introduction of ten new compute nodes, each with 56 cores and 384GB of RAM. These will form a new queue of enhanced compute nodes, available in addition to the standard compute nodes.

- A new NVIDIA A40 GPU card in each of the GPU nodes. A single A40 offers more than double the single-precision performance of the existing GPU node's four K40m cards, with the same GPU memory and number of CUDA cores. Because it is a single card, it is also a simpler platform on which to make use of all of the resource. The new cards are also considerably more energy efficient.
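Once the new nodes are in service, the standard Slurm `sinfo` command is one way to see which queues (partitions) exist and what hardware they offer. The partition name for the enhanced nodes is not given on this page, so the sketch below simply lists every partition. The choice of output columns is illustrative.

```shell
#!/bin/bash

# List each partition with its node count, CPUs per node and memory per node (MB)
sinfo -o "%P %D %c %m"
```

A partition that contains nodes of different types may appear on more than one line, one line for each distinct combination of CPUs and memory.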

Various parts of the software stack are seeing an upgrade:

- The operating system is being upgraded from CentOS 7.2 to CentOS 7.9. This is an important update for security and continued support, and it also enables improved functionality (e.g. Using Containers)

- Slurm is being upgraded to a newer version, providing functionality, stability and security improvements

- While the existing Viper application stack will remain available, going forward a new application stack will supplement, and eventually replace, many of the applications, tools and libraries with optimised, more up-to-date versions.

- Updated and improved cluster management stack, allowing us to provide more efficient and responsive updates and support.

Connecting

To connect to this system, point your SSH client at viperlogin.hpc.hull.ac.uk
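From a Linux or macOS terminal, or any other OpenSSH client, a connection looks like the line below. `username` is a placeholder for your own university username.

```shell
#!/bin/bash

# "username" is a placeholder: replace it with your own university username
ssh username@viperlogin.hpc.hull.ac.uk
```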

Resource Requirements

To reduce problems caused by tasks using more resources (CPU or RAM) than they have been allocated, Slurm now uses Linux cgroups to monitor each task's resource use more closely. Tasks that exceed their allocation are limited (CPU) or terminated (RAM), so it is important to request the correct amount of RAM for each task.

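Example memory request (standard compute):

| Memory request | Memory available to the job |
| --- | --- |
| Default (no specific memory request) | approx. 4GB per core (i.e. 128GB RAM / 28 cores) |
| #SBATCH --mem=40G | 40GB (i.e. the specific amount requested) |
| #SBATCH --exclusive | 128GB (i.e. the node's full 128GB, for exclusive use) |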
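For illustration, a minimal job script that requests a specific amount of memory might look like the following. The job name, time limit and `./my_program` are hypothetical placeholders. The `compute` partition name comes from the `sacct` example later on this page.

```shell
#!/bin/bash
#SBATCH -J mem-example
#SBATCH -p compute
#SBATCH -n 1
#SBATCH --time=01:00:00
# Request 40GB for the job; using more than this triggers the cgroup out-of-memory handler
#SBATCH --mem=40G
#SBATCH -o slurm-%j.out
#SBATCH -e slurm-%j.err

# Slurm sets SLURM_MEM_PER_NODE (in MB) when --mem is used
echo "Memory allocated: ${SLURM_MEM_PER_NODE:-not set} MB"

# Hypothetical placeholder for your own application
./my_program
```

Submit the script with `sbatch` as usual. Comment lines placed between the `#SBATCH` lines are ignored, and Slurm still reads every directive above the first command.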

If a task is terminated due to exceeding the requested amount of memory, you should see a message in your Slurm error log file, such as:

```
slurmstepd: error: Detected 1 oom-kill event(s) in StepId=319.batch. Some of your processes may have been killed by the cgroup out-of-memory handler.
```
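To check a set of finished jobs for this message, a small shell function can search their error logs. This is a sketch: `check_oom` is a hypothetical helper name. The `*.err` pattern assumes your Slurm error output goes to files ending in `.err`, as the `-e %J.err` option in the container example on this page does.

```shell
#!/bin/bash

# check_oom (hypothetical helper): succeed if the given Slurm log file
# contains the cgroup out-of-memory message shown above
check_oom() {
    grep -qF "oom-kill event" "$1"
}

# Report every .err file in the current directory that records an OOM kill
for log in *.err; do
    # Skip the unexpanded pattern if no .err files exist
    [ -e "$log" ] || continue
    if check_oom "$log"; then
        echo "$log: job exceeded its memory request; consider a larger --mem"
    fi
done
```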

Job Emails

It is now possible to receive email alerts when certain events occur, using Slurm's built-in support for the --mail-type SBATCH directive.

The most commonly used values are listed below (multiple values may be given as a comma-separated list):

- NONE (the default if you don't set --mail-type)

- BEGIN

- END

- FAIL

- REQUEUE

- ALL (equivalent to BEGIN, END, FAIL, INVALID_DEPEND, REQUEUE, and STAGE_OUT)

- INVALID_DEPEND (dependency never satisfied)

- TIME_LIMIT (reached the time limit), TIME_LIMIT_90 (reached 90 per cent of the time limit), TIME_LIMIT_80 (reached 80 per cent of the time limit), TIME_LIMIT_50 (reached 50 per cent of the time limit)

- ARRAY_TASKS (sends emails for each array task; otherwise BEGIN, END and FAIL apply to a job array as a whole, rather than generating an individual email for each task in the array)

The address to notify is set with --mail-user; however, only @hull.ac.uk email addresses are valid.

If you want to be alerted when your job completes, we advise using #SBATCH --mail-type=END,FAIL. This notifies you both when a job finishes cleanly and when it ends because of an error.
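Mail types can be combined. For example, a job array that sends an email when each individual task ends or fails, and also warns when a task reaches 80 per cent of its time limit, could be written as follows. The job name, array range, time limit and partition are illustrative. The address is a placeholder and must be a @hull.ac.uk address.

```shell
#!/bin/bash
#SBATCH -J mail-example
#SBATCH -p compute
#SBATCH -n 1
#SBATCH --time=02:00:00
# Four array tasks, numbered 1 to 4
#SBATCH --array=1-4
# END and FAIL for each array task (ARRAY_TASKS), plus a warning at 80% of the time limit
#SBATCH --mail-type=END,FAIL,TIME_LIMIT_80,ARRAY_TASKS
#SBATCH --mail-user=<your Hull email address>

echo "Running array task ${SLURM_ARRAY_TASK_ID}"
```

With ARRAY_TASKS, each of the four tasks sends its own emails, so a large array can generate a lot of messages.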

An example of a completion email is shown below:

Slurm Information

It is now possible to view the job submission script that was used to submit a job, using sacct -B -j <jobnumber>. For example:

```
$ sacct -B -j 317
Batch Script for 317
--------------------------------------------------------------------------------
#!/bin/bash
#SBATCH -J jobsubmissionfile
#SBATCH -n 1
#SBATCH -o slurm-%j.out
#SBATCH -e slurm-%j.out
#SBATCH -p compute
#SBATCH --exclusive
#SBATCH --time=1-00:00:00
#SBATCH --mail-type=END,FAIL
#SBATCH --mail-user=<your Hull email address>
echo "This is my submission script"
sleep 10
```
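To reuse a script recovered this way, you can remove the header that sacct prints before saving the output. The sketch below assumes the layout shown above: a title line followed by a line of dashes. `strip_sacct_header` and the output file name are hypothetical.

```shell
#!/bin/bash

# strip_sacct_header (hypothetical helper): read `sacct -B` output on stdin and
# delete everything up to and including the first line starting with dashes,
# leaving only the batch script itself
strip_sacct_header() {
    sed '1,/^---/d'
}

# Example usage (job 317 as above):
# sacct -B -j 317 | strip_sacct_header > recovered-317.sh
```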

Using Containers

Viper now supports the use of Containers, via Apptainer (previously called Singularity).

Containers can be built to include everything needed to run an application or code, independently of the host operating system: the application or code itself, libraries, scripts or even data. This makes them ideal for reproducible science, because experiments and data can be distributed as a single file. Running in a container generally has a negligible performance impact, if any, compared with running on bare metal. Containers are an increasingly popular way of distributing applications and workflows.

Docker is perhaps the best-known form of container, but its security implications make it unsuitable for a shared, multi-user environment like HPC. Apptainer does not have the same security issues; indeed, "[Singularity] is optimized for compute focused enterprise and HPC workloads, allowing untrusted users to run untrusted containers in a trusted way". Containers are built from a recipe file that includes all the components required to support the workflow. That recipe file can be based on an existing Docker container, which allows many existing Docker containers to be used with Singularity.
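As a sketch of reusing an existing Docker container, you can pull an image from Docker Hub, convert it into a single .sif file and then use it like any other container. This assumes the Singularity module used in the MaxQuant job script on this page. The public `ubuntu:20.04` image and the output file name are examples only. Building from your own recipe file is not shown, because it may require privileges that are not available on a shared system.

```shell
#!/bin/bash

# Load Singularity (module name as used in the MaxQuant example on this page)
module add test-modules singularity/3.5.3/gcc-8.2.0

# Pull a public Docker Hub image and convert it to a local .sif file
singularity pull ubuntu_20.04.sif docker://ubuntu:20.04

# Run a single command inside the container to check that it works
singularity exec ubuntu_20.04.sif cat /etc/os-release
```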

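For example, the following job script runs MaxQuant from a container on the GPU partition:

```shell
#!/bin/bash
#SBATCH -J Sing-maxquant
#SBATCH -p gpu
#SBATCH -o %J.out
#SBATCH -e %J.err
#SBATCH --time=20:00:00
#SBATCH --exclusive

# Load the Singularity module
module add test-modules singularity/3.5.3/gcc-8.2.0

# Run MaxQuant inside the container (each part of this line is explained in the table below)
singularity exec /home/ViperAppsFiles/singularity/containers/maxquant.sif mono MaxQuant/bin/MaxQuantCmd.exe mqpar.xml
```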

Singularity/Apptainer command example:

| Part of the command | Meaning |
| --- | --- |
| singularity | Run Apptainer (Singularity) |
| exec | Tell Apptainer (Singularity) to execute a command (rather than, for example, start a shell) |
| /home/ViperAppsFiles/singularity/containers/maxquant.sif | Tell Apptainer (Singularity) which container to use |
| mono MaxQuant/bin/MaxQuantCmd.exe mqpar.xml | The command to run inside the container: here mono, running the .NET application MaxQuantCmd.exe with the experiment configuration file mqpar.xml |
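The `exec` subcommand runs one command and then exits. When you want to explore a container interactively, it can be more convenient to open a shell inside it instead. Below is a minimal sketch using the same module and MaxQuant container as the job script above. `container.sif` in the final comment is a hypothetical file name.

```shell
#!/bin/bash

module add test-modules singularity/3.5.3/gcc-8.2.0

# Start an interactive shell inside the container (type "exit" to leave)
singularity shell /home/ViperAppsFiles/singularity/containers/maxquant.sif

# For GPU applications, --nv makes the host NVIDIA driver and GPU available
# inside the container, e.g.:
# singularity exec --nv container.sif nvidia-smi
```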

Most existing workflows are expected to work as before, or with minimal modification, after the upgrade from CentOS 7.2 to CentOS 7.9. For any workflows that do show problems, we aim to provide an Apptainer container based on Viper's previous CentOS 7.2 compute node image.