Programming/OpenMP


Latest revision as of 10:37, 9 December 2022

Introduction to OpenMP

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared memory multiprocessing programming in C, C++, and Fortran on most platforms including our own HPC.

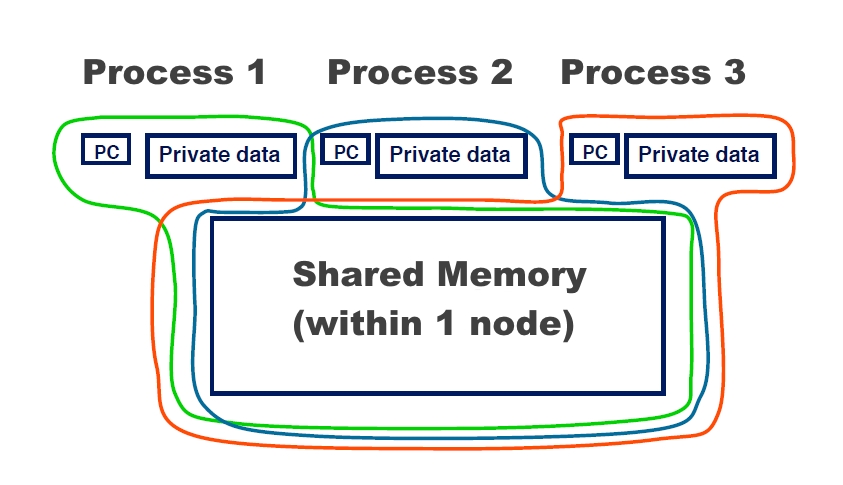

The programming model for shared memory is based on the notion of threads:

- Threads are like processes, except that threads can share the memory with each other (as well as have private memory)

- Shared data can be accessed by all threads

- Private data can only be accessed by the owning thread

- Different threads can follow different flows of control through the same program

- each thread has its own program counter

- Usually run one thread per CPU/core

- but could be more

- can have hardware support for multiple threads per core

An example of how this is implemented in computer memory is shown below:

Programming API

OpenMP is designed for multi-processor/multi-core shared memory machines. The underlying architecture can be either UMA (uniform memory access) or NUMA (non-uniform memory access) shared memory.

It is an Application Program Interface (API) that may be used to explicitly direct multi-threaded, shared memory parallelism. It consists of three primary components:

- Compiler Directives

- Runtime Library Routines

- Environment Variables

OpenMP compiler directives are used for various purposes:

- Spawning a parallel region

- Dividing blocks of code among threads

- Distributing loop iterations between threads

- Serializing sections of code

- Synchronization of work among threads

Programming model

Within the shared memory model, work is carried out by threads, which share memory with all the other threads of the program. Threads also have the following characteristics:

- Private data can only be accessed by the thread owning it

- Each thread can run simultaneously with other threads but also asynchronously, so we need to be careful of race conditions.

- Usually we have one thread per processing core, although there may be hardware support for more (e.g. hyper-threading)

Thread Synchronization

As mentioned previously, threads execute asynchronously: each thread proceeds through the program instructions independently of the other threads.

Although this makes for a very flexible system, we must be very careful that actions on shared variables occur in the correct order.

- e.g. if thread 1 reads a variable before thread 2 has written to it, thread 1 will get a stale or incorrect value; likewise, if two threads update the same shared variable at the same time, one of the updates may be lost.

To prevent this, we must either use data that is independent between threads (i.e. each thread works on a different part of an array) or add synchronization to the code so that threads reach a given point, or access a shared variable, in a controlled order.

First threaded program

Creating the most basic C program would look like the following:

```c
#include <stdio.h>

int main()
{
    printf("hello world\n");
    return 0;
}
```

To thread this, we must tell the compiler which parts of the program to run in threads:

```c
#include <omp.h>
#include <stdio.h>

int main()
{
    #pragma omp parallel
    {
        printf("hello ");
        printf("world\n");
    }
    return 0;
}
```

Let us look at the extra components that make this a parallel-threaded program:

- We include the OpenMP header file (#include <omp.h>)

- We use #pragma omp parallel, which tells the compiler that the following block within the { } is to be executed by a team of threads

To compile this, use one of the following commands:

```
$ gcc -fopenmp myprogram.c -o myprogram    # GNU compiler
$ icc -fopenmp myprogram.c -o myprogram    # Intel compiler
```

When we run this, we get something like the following:

```
$ ./myprogram
hello hello world
world
hello hello world
world
```

- This is not very coherent, but remember that the threads all execute asynchronously, each at its own pace; this is why we must be very careful about communication between different threads of the same program.

Second threaded program

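Although the previous program is threaded, it does not represent a real-world example. A slightly more realistic structure is:

```c
#include <omp.h>
#include <stdio.h>

/* pooh() is a placeholder for real per-thread work (hypothetical) */
void pooh(int id, double *A)
{
    A[id] = (double) id;   /* each thread writes its own element */
}

int main(void)
{
    double A[1000];
    omp_set_num_threads(4);
    #pragma omp parallel
    {
        int ID = omp_get_thread_num();
        pooh(ID, A);
    }
    return 0;
}
```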

Here each thread executes the same code independently; the only difference is the OpenMP thread ID passed to each call of pooh(ID, A).

All threads wait at the closing brace of the parallel region before the program proceeds (i.e. an implicit synchronization barrier).

In this program we expect to be given 4 threads. Unfortunately, this is not guaranteed: the OpenMP runtime may provide fewer threads than requested, depending on what the system is prepared to offer. This could cause serious difficulties if the program relies on a fixed number of threads.

We must therefore ask the OpenMP runtime library how many threads we actually received. This is done with omp_get_num_threads():

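```c
#include <omp.h>
#include <stdio.h>

/* pooh() is a placeholder for real per-thread work (hypothetical) */
void pooh(int id, double *A)
{
    A[id] = (double) id;
}

int main(void)
{
    double A[1000];
    omp_set_num_threads(4);
    #pragma omp parallel
    {
        int ID = omp_get_thread_num();
        int nthrds = omp_get_num_threads();   /* threads actually received */
        if (ID == 0)
            printf("running with %d threads\n", nthrds);
        pooh(ID, A);
    }
    return 0;
}
```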

- Each thread calls pooh(ID, A) for ID = 0 to nthrds-1

- This program hard-codes the number of threads requested to 4, which is not a good way of programming: changing it requires recompiling. A better approach is to remove the omp_set_num_threads(4) call and set the environment variable OMP_NUM_THREADS instead:

```
$ export OMP_NUM_THREADS=4
$ ./myprogram
```

Parallel loops

Loops are the main source of parallelism in many applications. If the iterations of a loop are independent (can be done in any order), we can share the iterations out between different threads. OpenMP provides a work-sharing construct to do this efficiently:

```c
#pragma omp parallel
{
    #pragma omp for
    for (loop = 0; loop < N; loop++)
    {
        do_threaded_task(loop);
    }
}
```

This is much neater, and the OpenMP runtime divides the iterations among the threads automatically (according to the loop schedule, unless otherwise specified). The loop variable is made private to each thread by default. Also, all threads wait at the end of the loop (an implicit barrier) before proceeding past the end of this construct.

One OpenMP shortcut is to combine the parallel and for directives into a single parallel for directive, which makes the code more readable:

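```c
#include <stdio.h>
#define MAX 100

/* placeholder for real per-element work */
double process_data(int i) { return 0.5 * i; }

int main(void)
{
    double data[MAX];
    int loop;
    #pragma omp parallel for
    for (loop = 0; loop < MAX; loop++)
    {
        data[loop] = process_data(loop);
    }
    printf("data[MAX-1] = %f\n", data[MAX - 1]);
    return 0;
}
```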

One side effect of threading, called false sharing, can cause poor scaling. If independent data elements updated by different threads happen to sit on the same cache line, each update causes the cache line to "slosh back and forth" between the threads' caches, so the line has to be re-fetched every time.

One solution is to pad arrays so that elements used by different threads are on distinct cache lines; another is to let a single thread operate on a whole cache line. A better way is to rewrite the program so that this effect is avoided altogether, as in the following example:

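```c
#include <omp.h>
#include <stdio.h>

static long num_steps = 100000;
double step;

#define NUM_THREADS 2

int main(void)
{
    int nthreads;
    double pi = 0.0;
    step = 1.0 / (double) num_steps;
    omp_set_num_threads(NUM_THREADS);

    #pragma omp parallel
    {
        int i, id, nthrds;
        double x, sum;                 /* sum is a private scalar, not an array */
        id = omp_get_thread_num();
        nthrds = omp_get_num_threads();
        if (id == 0)
            nthreads = nthrds;         /* record the team size actually received */

        /* cyclic distribution: thread id handles i = id, id+nthrds, ... */
        for (i = id, sum = 0.0; i < num_steps; i = i + nthrds)
        {
            x = (i + 0.5) * step;
            sum += 4.0 / (1.0 + x * x);
        }
        #pragma omp critical
        pi += sum * step;              /* one thread at a time */
    }
    printf("pi = %.10f (computed with %d threads)\n", pi, nthreads);
    return 0;
}
```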

- Here we create a scalar local to each thread to accumulate partial sums

- No array, so no false sharing

- Within the parallel region, a #pragma omp critical section allows only one thread at a time to add its partial sum to pi.

This solution will scale much better than an array-based design. Because this pattern is so common in loops, OpenMP also provides a reduction clause:

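```c
#include <stdio.h>
#define MAX 1000

int main(void)
{
    double ave = 0.0, A[MAX];
    int i;
    for (i = 0; i < MAX; i++)
        A[i] = i;                      /* sample data */

    #pragma omp parallel for reduction(+:ave)
    for (i = 0; i < MAX; i++)
    {
        ave += A[i];                   /* each thread sums into a private copy */
    }
    ave = ave / MAX;
    printf("average = %f\n", ave);
    return 0;
}
```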

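- Other reduction operators are provided as well, including *, -, max and min, and (in C/C++) the bitwise and logical operators &, |, ^, && and ||. Division is not a reduction operator, and - is deprecated in recent versions of the standard.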

Barriers

```c
#pragma omp parallel
{
    somecode();
    // all threads wait until all threads get here
    #pragma omp barrier
    othercode();
}
```

- Barriers are very useful (and sometimes essential) to make sure all threads have reached the same point. However, they are expensive in terms of efficiency, since every thread must sit idle until the slowest thread arrives at the barrier.

- There are also times when you don't want the implicit barrier at the end of an omp for loop; it can be removed with the nowait clause:

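```c
#pragma omp parallel
{
    int id = omp_get_thread_num();
    #pragma omp for nowait
    for (i = 0; i < N; i++)
    {
        B[i] = big_calc2(C, i);
    }
    /* no barrier after the loop: each thread moves on to this line as
       soon as it has finished its share of the iterations */
    A[id] = big_calc4(id);
}
```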

Performance threading

- Avoid false sharing; it can be the greatest limitation to scaling

- Check that your program justifies the overhead of threads: if you are parallelizing only a small for loop, the overhead cannot be justified and will increase the run time.

- The best choice of loop schedule may change from system to system

- Minimize synchronization; use nowait where practical

- Consider locality: most systems are NUMA, so restructure your loop nest or change the loop order to get better cache behaviour

C Example

#include <stdio.h>
#include <stdlib.h>   /* calloc and free; the non-standard <malloc.h> is not needed */

/* compile with: gcc -o test2 -fopenmp test2.c */

int main(int argc, char** argv)
{
    int i = 0;
    int size = 20;
    int* a = (int*) calloc(size, sizeof(int));
    int* b = (int*) calloc(size, sizeof(int));
    int* c;

    if (a == NULL || b == NULL)
        return 1;

    for ( i = 0; i < size; i++ )
    {
        a[i] = i;
        b[i] = size-i;
        printf("[BEFORE] At %d: a=%d, b=%d\n", i, a[i], b[i]);
    }

    /* a and b are shared by all threads; each thread has its own c and i */
    #pragma omp parallel shared(a,b) private(c,i)
    {
        /* each thread allocates its own scratch buffer */
        c = (int*) calloc(3, sizeof(int));

        /* the loop iterations are divided among the threads */
        #pragma omp for
        for ( i = 0; i < size; i++ )
        {
            c[0] = 5*a[i];
            c[1] = 2*b[i];
            c[2] = -2*i;
            a[i] = c[0]+c[1]+c[2];
            c[0] = 4*a[i];
            c[1] = -1*b[i];
            c[2] = i;
            b[i] = c[0]+c[1]+c[2];
        }

        free(c);
    }

    for ( i = 0; i < size; i++ )
    {
        printf("[AFTER] At %d: a=%d, b=%d\n", i, a[i], b[i]);
    }

    free(a);
    free(b);
    return 0;
}

Fortran Example

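program omp_par_do
  implicit none
  integer, parameter :: n = 100
  real, dimension(n) :: dat, result
  integer :: i

  ! give the input some values (illustrative)
  dat = [(real(i), i = 1, n)]

  ! the loop iterations are divided among the threads;
  ! the loop index i is private to each thread automatically
  !$OMP PARALLEL DO
  do i = 1, n
     result(i) = my_function(dat(i))
  end do
  !$OMP END PARALLEL DO

  print *, 'sum of results = ', sum(result)

contains

  function my_function(d) result(y)
    real, intent(in) :: d
    real :: y
    ! do something complex with data to calculate y;
    ! a simple placeholder calculation is used here
    y = d * d
  end function my_function

end program omp_par_do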

Tips for programming

- Mistyping the sentinel (e.g. !OMP instead of !$OMP, or #pragma opm) typically raises no error message; the line is simply ignored.

- Don't forget that private variables are uninitialized on entry to parallel regions. Use firstprivate if each thread needs the value the variable had before the region.

- If you have large private data structures, it is possible to run out of stack space.

- The stack size of every thread except the master thread can be controlled with the OMP_STACKSIZE environment variable. The master thread's stack is set by the shell, e.g. with ulimit -s.

- Write code that also works without OpenMP. The macro _OPENMP is defined only when the code is compiled with the OpenMP switch (e.g. -fopenmp), so calls to the OpenMP runtime can be guarded with #ifdef _OPENMP.

- Be aware that the overhead of executing a parallel region is typically in the range of tens of microseconds. The exact figure depends on the compiler, the hardware and the number of threads.

- Tuning the chunk size for static or dynamic schedules can be tricky, because the optimal chunk size can depend quite strongly on the number of threads.

- Make sure your timer actually measures wall-clock time. On Linux, for example, clock() reports CPU time summed over all threads. Use omp_get_wtime() instead.

Compilation

The program is compiled as follows (the Intel compiler is also available as an alternative):

For C:

[username@login01 ~]$ module add gcc/10.2.0
[username@login01 ~]$ gcc -o test2 -fopenmp test2.c

For Fortran:

[username@login01 ~]$ module add gcc/10.2.0
[username@login01 ~]$ gfortran -o test2 -fopenmp test2.f90

Modules Available

The following modules are available for OpenMP:

- module add gcc/10.2.0 (GNU compiler)

- module add intel/2018 (Intel compiler)

OpenMP support is built into the compiler, so no additional module is needed beyond the compiler module itself.

Usage Examples

- For OpenMP programs, add the line export OMP_NUM_THREADS=<number of threads> to the job script, because SLURM cannot determine how many threads your program needs before runtime.

Batch example

#!/bin/bash
#SBATCH -J openmpi-single-node
#SBATCH -N 1
#SBATCH --ntasks-per-node 28
#SBATCH -o %N.%j.%a.out
#SBATCH -e %N.%j.%a.err
#SBATCH -p compute
#SBATCH --exclusive

echo $SLURM_JOB_NODELIST

module purge
module add gcc/10.2.0

# The I_MPI_* variables only affect Intel MPI; a pure OpenMP program does not need them
export I_MPI_DEBUG=5
export I_MPI_FABRICS=shm:tmi
export I_MPI_FALLBACK=no

# one thread for each of the 28 tasks requested above
export OMP_NUM_THREADS=28

/home/user/CODE_SAMPLES/OPENMP/demo

[username@login01 ~]$ sbatch demo.job
Submitted batch job 289552

Hybrid OpenMP/MPI codes

On its own, OpenMP runs within a single node, but it can also be combined with MPI in a hybrid model.

An application built with the hybrid model of parallel programming can run on a computer cluster using both OpenMP and Message Passing Interface (MPI), such that OpenMP is used for parallelism within a (multi-core) node while MPI is used for parallelism between nodes. There have also been efforts to run OpenMP on software-distributed shared memory systems.

Four possible performance reasons for mixed OpenMP/MPI codes:

- Replicated data

- Poorly scaling MPI codes

- Limited MPI process numbers

- MPI implementation not tuned for SMP clusters

OpenMP pragmas

| OMP construct | Description |

| #pragma omp parallel | Parallel regions, teams of threads, structured blocks, interleaved execution across threads |

| int omp_get_thread_num(), int omp_get_num_threads() | Create threads with a parallel region and split up the work using the number of threads and the thread ID |

| double omp_get_wtime() | Timing, speedup and Amdahl's law; false sharing and other performance issues |

| setenv OMP_NUM_THREADS N (csh), export OMP_NUM_THREADS=N (bash) | Internal control variables; setting the default number of threads with an environment variable |

| #pragma omp barrier | Synchronization and race conditions; interleaved execution revisited |

| #pragma omp for | Worksharing, parallel loops, loop-carried dependencies |

| reduction(op:list) | Reductions of values across a team of threads |

| schedule(dynamic [,chunk]), schedule(static [,chunk]) | Loop schedules, loop overheads and load balance |

| private(list), firstprivate(list), shared(list) | Data environment |

| nowait | Disabling implied barriers on worksharing constructs; the high cost of barriers; the flush concept (but not the flush construct) |

| #pragma omp single | Worksharing with a single thread |

| #pragma omp task | Tasks, including the data environment for tasks |

Next Steps

- https://en.wikipedia.org/wiki/OpenMP

- http://www.openmp.org/

- https://computing.llnl.gov/tutorials/openMP/

- http://fortranwiki.org/fortran/show/OpenMP

- YouTube: Intel OpenMP training videos