DAIM Guide


Latest revision as of 15:50, 1 July 2025


Introduction

Viper, the University of Hull’s High-Performance Computing facility, is used by research staff and students in many disciplines across the University and is a potentially significant tool for anyone whose research has a computational element.

Viper is a ‘cluster’ of approximately 200 compute nodes and features more than 6,000 compute cores, high-memory systems and GPU technology, together with dedicated high-performance storage and a fast interconnect between systems, to meet the needs of the most computationally demanding tasks.

Some notes about Viper:

- Viper runs Linux, though to make use of Viper you do not need to be a Linux expert – being familiar with just a few commands is enough to get started.

- Viper runs a scheduler, a piece of software that manages access to the computers across the cluster, monitoring what is running and how resources such as memory, CPUs and GPU cards are being used.

- Users run ‘jobs’, some of which are interactive (such as Jupyter Notebook sessions), but many of which are submitted to run automatically without user interaction. Jobs usually have a batch script: a text file containing the recipe for the job, for example what resources are needed and what the job should do.
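The sketch below assembles a batch script as text in Python to show what such a recipe typically contains. It assumes Viper's scheduler is Slurm, although this guide only says "a scheduler". The partition name, resource amounts and the `train.py` command are hypothetical placeholders, not Viper's actual settings.

```python
# Minimal sketch: build the text of a batch script ("recipe") for a job.
# ASSUMPTIONS: the scheduler is Slurm, and every value passed in below
# (partition, time, memory, command) is a hypothetical placeholder.


def make_batch_script(job_name: str, partition: str, time_limit: str,
                      mem: str, gpus: int, command: str) -> str:
    """Return a batch script: resource requests first, then what to run."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",  # hypothetical partition name
        f"#SBATCH --time={time_limit}",      # request only the runtime you need
        f"#SBATCH --mem={mem}",              # system RAM for the job
    ]
    if gpus > 0:
        lines.append(f"#SBATCH --gres=gpu:{gpus}")  # GPU request
    lines += ["", command, ""]  # the work the job should do
    return "\n".join(lines)


if __name__ == "__main__":
    print(make_batch_script(
        job_name="train-model",
        partition="gpu",
        time_limit="02:00:00",
        mem="32G",
        gpus=1,
        command="python train.py",
    ))
```

Under the same Slurm assumption, you would save this text to a file such as `train_job.sh` and submit it with `sbatch train_job.sh`. The DAIM web portal apps let you start jobs without writing a script yourself.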

Prerequisites

- Viper account - The request for a Viper account should be completed by the MSc project supervisor on behalf of the student; this confirms that the requirements for Viper access have been discussed and are supported by the project supervisor. To apply for a Viper account, please see [DAIM MSc Project Account Request](https://hull.service-now.com/staff?id=sc_cat_item&table=sc_cat_item&sys_id=5f7e08cb1b694610998b6204b24bcbbf).

- VPN - You will need VPN access to connect to Viper from off campus. Please see the [GlobalProtect Client VPN Service page on the Support Portal](https://hull.service-now.com/).

Using Viper OnDemand

OnDemand Web Portal Access

We are currently testing a web route that provides Jupyter access via our pilot Viper OnDemand web portal; see http://hpc.mediawiki.hull.ac.uk/General/OOD for more information. This service is still in testing, so please raise any issues via the University support portal, searching for Viper to find the relevant support request forms.

Connecting to Viper OnDemand

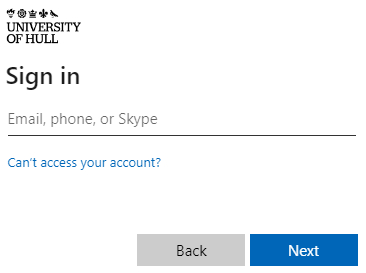

- Incognito mode - it is recommended to connect to Viper OnDemand from a private or incognito browser window. This is due to some caching issues making your browser think you are logged in when you are not.

- https://viperood.hpc.hull.ac.uk

- Log in using your university credentials - note unlike logging into Viper via SSH, in this situation you need to log in with your University email address.

Please note:

- VPN access is still required to connect to the Viper OnDemand web portal.

- The DAIM Jupyter apps are in testing and there may be issues which have yet to be resolved.

Available Resource

Important: While Viper has a range of GPU resources available for DAIM student use, including Nvidia A100- and Nvidia A40-based systems, these resources are in very high demand. At times many people may be competing for them, so long pending (queuing) times are likely.

Viper is a shared resource, so to help alleviate this for all users, we suggest the following:

- Where possible, only do final GPU runs on Viper, and keep code development to other systems (e.g. your own computer, a DAIM lab computer or Viper CPU nodes).

- Don't request longer runtimes than you need. If you have resources allocated to you but are not going to use them for a period of time, please cancel your allocation.

- Please remember that other users also need GPU access: don't queue up multiple Jupyter sessions.
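If you work from a terminal, you can act on the last two suggestions by listing your own jobs and spotting duplicate sessions. The sketch below assumes that Viper uses Slurm and that `squeue` is available in your session. The `%i|%j|%T|%S` format string (job ID, name, state, start time) is standard `squeue` syntax, but the job names you see in practice depend on how the portal labels sessions.

```python
# Minimal sketch: list your own Slurm jobs and flag duplicate names.
# ASSUMPTIONS: Viper's scheduler is Slurm and `squeue` is on your PATH.
import getpass
import subprocess
from collections import Counter

# %i = job ID, %j = job name, %T = state, %S = actual or expected start time
SQUEUE_FORMAT = "%i|%j|%T|%S"


def parse_squeue(output: str) -> list[dict]:
    """Turn `squeue --noheader -o '%i|%j|%T|%S'` output into dicts."""
    jobs = []
    for line in output.strip().splitlines():
        job_id, name, state, start = line.split("|", 3)
        jobs.append({"id": job_id, "name": name, "state": state, "start": start})
    return jobs


def my_jobs() -> list[dict]:
    """Query the scheduler for the current user's queued and running jobs."""
    result = subprocess.run(
        ["squeue", "-u", getpass.getuser(), "--noheader", "-o", SQUEUE_FORMAT],
        capture_output=True, text=True, check=True,
    )
    return parse_squeue(result.stdout)


def duplicate_names(jobs: list[dict]) -> list[str]:
    """Job names that appear more than once, e.g. several queued sessions."""
    counts = Counter(job["name"] for job in jobs)
    return [name for name, n in counts.items() if n > 1]
```

For example, `duplicate_names(my_jobs())` returns any job name that appears more than once. Under the same Slurm assumption, `scancel <jobid>` cancels a job you no longer need.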

| Resource Type | GPU RAM * | System RAM | Count | Accessible as | Notes |
|---|---|---|---|---|---|
| Nvidia A100 MIG 40GB | 40GB | 64GB | 4 | Web portal MIG version | High demand |
| Nvidia A100 MIG 20GB | 20GB | 64GB | 2 | Web portal MIG version | High demand |
| Nvidia A100 MIG 10GB | 10GB | 32GB | 1 | Web portal MIG version | Medium demand |
| CPU only | - | 128GB / 1TB | 10+ | Web portal | Lower demand but low performance for ML; use for development and testing |
| Nvidia A40 | 48GB | 128GB | 2 | Web portal | Very high demand, expect long wait times; use only if the task exceeds the GPU memory available on MIG instances |

* There are two specifications of DAIM lab PCs. The majority are equipped with Nvidia GeForce RTX 3070 GPU cards with 8GB of GPU memory, and every GPU instance on Viper has at least this much GPU memory available. Several lab PCs (the dual-monitor lab machines) have Nvidia RTX 3090 GPU cards with 24GB of GPU memory.
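A rough memory estimate can help you follow the "only select what is required" advice. The sketch below is a hypothetical helper, not a Viper tool. The GPU memory figures come from the table above. The bytes-per-parameter figures are common rules of thumb: 4 bytes per float32 value, or roughly 16 bytes per parameter for float32 training with the Adam optimizer (weights, gradients and two optimizer states). These figures ignore activations, which is why the helper adds an assumed 20% headroom.

```python
# Minimal sketch: choose the smallest listed GPU option that fits a job.
# ASSUMPTIONS: memory needs are rough estimates that ignore activations,
# so a hypothetical 20% headroom is added; the helper is not a Viper tool.
from typing import Optional

# (resource, GPU memory in GB), smallest first, from the table above
GPU_OPTIONS = [
    ("Nvidia A100 MIG 10GB", 10),
    ("Nvidia A100 MIG 20GB", 20),
    ("Nvidia A100 MIG 40GB", 40),
    ("Nvidia A40", 48),
]


def estimate_gb(num_params: int, bytes_per_param: int = 4) -> float:
    """Rough memory in GB (1e9 bytes): 4 bytes/param for float32 weights,
    about 16 bytes/param for float32 training with Adam."""
    return num_params * bytes_per_param / 1e9


def smallest_fit(required_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Smallest option with at least required_gb * headroom of GPU memory."""
    for name, mem_gb in GPU_OPTIONS:
        if mem_gb >= required_gb * headroom:
            return name
    return None  # larger than any single GPU option in the table
```

For example, a model with 1 billion parameters needs about 4GB for its float32 weights alone. Training it with Adam needs roughly 16GB, which points to the 20GB MIG instance rather than an A40.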

DAIM Specific Web Portal Apps

When you connect to the web portal, you will see several DAIM-prefixed apps that you can use to connect:

Create Python Virtual Environment

- DAIM Create Environment - this will create a base Python virtual environment. You can change the name of the environment by editing the text in the Environment Name box. You can then select this environment's kernel in notebooks within DAIM sessions.
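For comparison, the sketch below does something similar by hand: it creates a virtual environment with Python's standard `venv` module and registers it as a Jupyter kernel with `ipykernel`. This is an assumption-laden illustration, not the internals of "DAIM Create Environment". The environment location and kernel name are hypothetical, and registering the kernel downloads `ipykernel` with pip.

```python
# Minimal sketch: create a Python virtual environment and (optionally)
# register it as a Jupyter kernel. ASSUMPTIONS: this is NOT the internals of
# "DAIM Create Environment"; the environment path and kernel name are
# hypothetical, and registering the kernel downloads ipykernel with pip.
import subprocess
import venv
from pathlib import Path


def create_env(env_dir: Path, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its interpreter."""
    venv.create(env_dir, with_pip=with_pip)
    return env_dir / "bin" / "python"  # Linux layout, as on Viper


def register_kernel(python: Path, name: str) -> None:
    """Install ipykernel into the environment and register it for Jupyter."""
    subprocess.run([str(python), "-m", "pip", "install", "ipykernel"], check=True)
    subprocess.run(
        [str(python), "-m", "ipykernel", "install", "--user",
         "--name", name, "--display-name", f"Python ({name})"],
        check=True,
    )
```

For example, `register_kernel(create_env(Path.home() / "envs" / "myenv"), "myenv")` would create an environment in a hypothetical `~/envs/myenv` folder and make it selectable as a kernel.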

Web Portal Nvidia A40 GPU access

- DAIM Jupyter - this will launch a JupyterLab session in your browser tab.

- DAIM Jupyter Desktop - this will also launch a Jupyter session, but with the web browser running on Viper OnDemand. This means you should be able to disconnect and reconnect while cells keep running.

Web Portal Nvidia A100 GPU MIG (Multi-Instance GPU) access

- DAIM Jupyter Desktop MIG - this will launch a Jupyter session with the web browser running on Viper OnDemand. This means you should be able to disconnect and reconnect while cells keep running. You can choose between a 10GB and a 20GB VRAM instance; please select only what you need.

- DAIM Jupyter MIG - this will launch a JupyterLab session in your browser tab. You can choose between a 10GB and a 20GB VRAM instance; please select only what you need.
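Once a MIG session starts, you may want to confirm which slice you were given. The sketch below parses the output of `nvidia-smi -L`. It assumes `nvidia-smi` is available inside the session. The regular expressions are based on the usual `MIG 2g.20gb`-style profile names, and the exact output format may differ between driver versions.

```python
# Minimal sketch: report which GPU or MIG slice this session can see.
# ASSUMPTIONS: nvidia-smi is on the PATH inside the session, and its -L
# output uses "MIG <n>g.<m>gb" profile names (format may vary by driver).
import re
import subprocess

MIG_RE = re.compile(r"MIG\s+(\d+)g\.(\d+)gb")
GPU_RE = re.compile(r"^GPU\s+\d+:\s+(.+?)\s+\(UUID", re.MULTILINE)


def describe_gpus(listing: str) -> list[str]:
    """Summarise `nvidia-smi -L` text: MIG slices if present, else whole GPUs."""
    migs = [f"MIG slice {c}g, {m}GB" for c, m in MIG_RE.findall(listing)]
    return migs or GPU_RE.findall(listing)


def list_gpus() -> str:
    """Return the raw `nvidia-smi -L` listing for this session."""
    result = subprocess.run(["nvidia-smi", "-L"],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

In a notebook cell, `print(describe_gpus(list_gpus()))` should show a slice whose memory matches the option you selected.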

Web Portal Nvidia A100 GPU MIG (Multi-Instance GPU) automated run

- TEST DAIM nbconvert Job - This submits a task that runs jupyter nbconvert to execute the specified notebook automatically once the resource is available, without you needing to interact with the notebook. Select a notebook using the "select path" button and click Launch. The task will then be listed as "Running" in your session list. Note that there is very little output until the notebook has finished executing. When it completes, nbconvert produces an output notebook with nbconvert in its name (e.g. the input mynotebook.ipynb produces mynotebook.nbconvert.ipynb). You can choose between a 10GB and a 20GB VRAM instance; please select only what you need.
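The same kind of unattended execution can be sketched with nbconvert's Python API (`nbformat.read`, `ExecutePreprocessor`, `nbformat.write`). The sketch also reproduces the output naming described above. It assumes `nbformat`, `nbconvert` and a kernel named `python3` are installed. The timeout is illustrative, and this is not necessarily how the portal app runs the notebook.

```python
# Minimal sketch: execute a notebook non-interactively via nbconvert's API.
# ASSUMPTIONS: nbformat/nbconvert and a "python3" kernel are installed; the
# timeout is illustrative and this may differ from the portal app's method.
from pathlib import Path


def nbconvert_output_path(notebook: Path) -> Path:
    """mynotebook.ipynb -> mynotebook.nbconvert.ipynb, alongside the input."""
    return notebook.with_name(f"{notebook.stem}.nbconvert.ipynb")


def execute_notebook(notebook: Path, kernel_name: str = "python3",
                     timeout: int = 3600) -> Path:
    """Run every cell, then save the executed copy next to the input."""
    # Imported here so the naming helper works without nbconvert installed.
    import nbformat
    from nbconvert.preprocessors import ExecutePreprocessor

    nb = nbformat.read(str(notebook), as_version=4)
    ep = ExecutePreprocessor(timeout=timeout, kernel_name=kernel_name)
    # Use the notebook's own folder as the working directory for its cells.
    ep.preprocess(nb, {"metadata": {"path": str(notebook.parent)}})
    out = nbconvert_output_path(notebook)
    nbformat.write(nb, str(out))
    return out
```

On the command line, `jupyter nbconvert --to notebook --execute mynotebook.ipynb` produces the same `mynotebook.nbconvert.ipynb` file name.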

Web Portal GPU and Job Status Page

To help you work out which GPU resources are available and how long a queued job may take to start, the web portal provides a monitoring tool, accessible via Jobs > GPU Status and current jobs.

Warning: The first time you open this tool, you will be warned that your web server needs to be restarted. Restarting may terminate running Jupyter sessions, so do not proceed if you have active sessions.