Difference between revisions of "Scaling"

Revision as of 11:30, 18 December 2019

Scaling Jobs

HPC, by its very nature, can scale a job (and speed it up) by spreading the work across more cores and nodes.

This assumption can be seriously flawed once the nature of the software and hardware combination is taken into account.

This page looks more deeply into this area by breaking the potential issues down into the sections below:

Memory Bandwidth

When many cores access memory at the same time, they share a finite memory bandwidth (even with modern memory channels), so speed-up is not linear even within a single node. With the added effect of [false sharing](https://en.wikipedia.org/wiki/False_sharing), performance can degrade significantly further.
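As a rough illustration of why speed-up within a node flattens out, the sketch below uses a deliberately simplified model: each core is assumed to need a fixed memory bandwidth, and the node can supply only a fixed total. The function name and the figures (10 GB/s per core, 100 GB/s per node) are hypothetical and chosen only for illustration; real behaviour also depends on caches, NUMA layout and false sharing, none of which are modelled here.

```python
def bandwidth_limited_speedup(cores, per_core_demand_gbs, node_bandwidth_gbs):
    """Idealised speed-up on one node when memory bandwidth is the only limit.

    Assumes (hypothetically) that every core needs the same bandwidth and
    that speed-up is linear until the node's memory bandwidth is saturated.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    # Number of cores at which the node's memory bandwidth is fully used.
    saturating_cores = node_bandwidth_gbs / per_core_demand_gbs
    return min(float(cores), saturating_cores)


if __name__ == "__main__":
    # Hypothetical figures: 10 GB/s needed per core, 100 GB/s available per node.
    for n in (1, 2, 4, 8, 16, 32):
        print(n, "cores ->", bandwidth_limited_speedup(n, 10.0, 100.0), "x")
```

In this toy model, adding cores beyond the saturation point gives no further speed-up, which is the non-linear behaviour described above.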

Node Interconnect Bandwidth

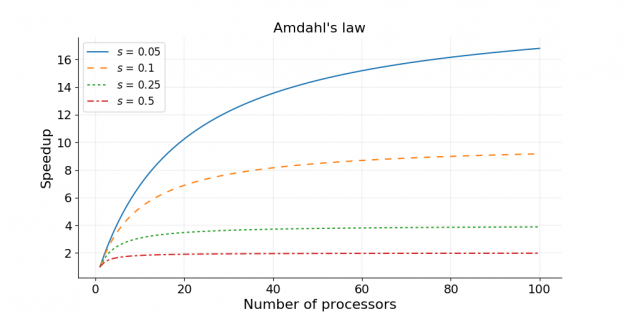

When using MPI across many nodes, remember that your program needs to send and receive data across the interconnect (the fast network that connects all the nodes together). Again, only a very few programs can scale to a large number of nodes efficiently. Most programs have a 'sweet spot' (for example, 16 nodes), beyond which doubling the computing resource allocated to them provides very little improvement (say, < 1%). In most cases this can only be observed through benchmarking and is difficult to calculate theoretically.

- s is the time spent in non-parallel (serial) tasks, which can include data transfers such as MPI communication and disk I/O
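The effect of the serial time s can be sketched with Amdahl's law. The example below is a minimal illustration, assuming the runtime on N workers is s + p / N, where p is the time spent in perfectly parallel work; the function names and the example timings (10 s serial, 90 s parallel) are hypothetical and not taken from any measured job.

```python
def amdahl_runtime(serial_time, parallel_time, workers):
    """Runtime on `workers` processing units: serial part plus divided parallel part."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return serial_time + parallel_time / workers


def amdahl_speedup(serial_time, parallel_time, workers):
    """Speed-up relative to running on a single processing unit."""
    single = serial_time + parallel_time
    return single / amdahl_runtime(serial_time, parallel_time, workers)


def doubling_gain(serial_time, parallel_time, workers):
    """Fractional runtime reduction obtained by doubling the number of workers."""
    before = amdahl_runtime(serial_time, parallel_time, workers)
    after = amdahl_runtime(serial_time, parallel_time, 2 * workers)
    return (before - after) / before


if __name__ == "__main__":
    s, p = 10.0, 90.0  # hypothetical timings in seconds
    for n in (1, 2, 4, 8, 16, 32, 64, 128, 256):
        print(
            f"{n:4d} workers: speed-up {amdahl_speedup(s, p, n):5.2f}x, "
            f"gain from doubling {100 * doubling_gain(s, p, n):5.1f}%"
        )
```

With these assumed timings the speed-up can never reach (s + p) / s = 10x, however many workers are added, and the gain from each doubling keeps shrinking. Real jobs often behave worse than this model, because communication cost tends to grow with node count, which is why benchmarking remains necessary.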