FurtherTopics/Additional Information On Viper

From HPC

Latest revision as of 09:41, 16 November 2022

Physical Hardware

Viper runs the Linux operating system and has approximately 5,500 processing cores, made up of the following hardware:

- 180 compute nodes, each with 2x 14-core Intel Broadwell E5-2680v4 processors (2.4–3.3 GHz) and 128 GB DDR4 RAM

- 4 high-memory nodes, each with 4x 10-core Intel Haswell E5-4620v3 processors (2.0 GHz) and 1 TB DDR4 RAM

- 4 GPU nodes, each identical to a compute node with the addition of one Nvidia Ampere A40 GPU

- 2 visualisation nodes with 2x Nvidia GeForce GTX 980 Ti

- Intel Omni-Path interconnect (100 Gb/s node-to-switch and switch-to-switch)

- 500 TB parallel file system (BeeGFS)
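As a rough cross-check of the "approximately 5,500 cores" figure, the per-node specifications in the list above can be totalled. This is a minimal sketch that uses only the counts stated in the list. It assumes the GPU nodes have the same 2x 14-core CPUs and 128 GB RAM as a standard compute node (as the list states), treats 1 TB as 1024 GB, and leaves out the visualisation nodes because their CPU configuration is not given here. The `NodeType` class and `summarise` function are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """One class of Viper node, using the figures from the hardware list."""
    name: str
    count: int          # number of nodes of this type
    sockets: int        # CPUs per node
    cores_per_cpu: int  # physical cores per CPU
    ram_gb: int         # RAM per node in GB (1 TB treated as 1024 GB)

    @property
    def total_cores(self) -> int:
        return self.count * self.sockets * self.cores_per_cpu

    @property
    def total_ram_gb(self) -> int:
        return self.count * self.ram_gb


# Visualisation nodes are omitted: their CPU configuration is not listed.
NODES = [
    NodeType("compute", 180, 2, 14, 128),
    NodeType("high memory", 4, 4, 10, 1024),
    NodeType("GPU (A40)", 4, 2, 14, 128),  # a compute node plus one A40
]


def summarise(nodes):
    """Return (total cores, total RAM in GB) across the given node types."""
    cores = sum(n.total_cores for n in nodes)
    ram = sum(n.total_ram_gb for n in nodes)
    return cores, ram


if __name__ == "__main__":
    for n in NODES:
        print(f"{n.name:12} {n.total_cores:5d} cores {n.total_ram_gb:6d} GB RAM")
    cores, ram = summarise(NODES)
    print(f"{'total':12} {cores:5d} cores {ram:6d} GB RAM")
```

The itemised nodes come to 5,312 cores, a little under the quoted approximate figure; nodes whose CPU configuration is not itemised here, such as the visualisation nodes, are not counted.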

The compute nodes (standard compute and high-memory) are stateless, while the GPU and visualisation nodes are stateful.

- Note: a stateless node has no persistent operating system storage between boots, so its operating system state does not survive a reboot. (The term comes from hard drives traditionally being the mechanism that persists this state.) Stateless does not necessarily mean diskless: our stateless nodes also have 128 GB of temporary SSD space.
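Because nothing on a stateless node persists between boots, anything written to node-local SSD space should be treated as temporary. One common pattern is to stage input into local scratch, do the work there, and copy results back to persistent storage (such as the BeeGFS file system) before finishing. The sketch below illustrates that pattern under stated assumptions: the scratch location defaults to Python's temporary directory (`tempfile.gettempdir()`, which honours `TMPDIR`) rather than any confirmed Viper mount point, and `run_in_scratch` and `count_lines` are hypothetical helpers, not part of any Viper tooling.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Callable, Optional


def run_in_scratch(
    input_file: Path,
    results_dir: Path,
    work: Callable[[Path, Path], None],
    scratch_root: Optional[Path] = None,
) -> Path:
    """Stage input into temporary scratch, run `work`, copy results back.

    `scratch_root` defaults to Python's temporary directory; on a real node
    this would be wherever the local SSD is mounted (assumption).
    """
    root = scratch_root if scratch_root is not None else Path(tempfile.gettempdir())
    # Refuse to start if the scratch area cannot even hold the input.
    needed = input_file.stat().st_size
    free = shutil.disk_usage(root).free
    if free < needed:
        raise OSError(f"not enough scratch space in {root}: need {needed}, {free} free")
    results_dir.mkdir(parents=True, exist_ok=True)
    # The scratch directory is deleted on exit, mirroring the fact that
    # node-local data must not be relied on after the job.
    with tempfile.TemporaryDirectory(dir=root) as tmp:
        workdir = Path(tmp)
        staged = workdir / input_file.name
        shutil.copy2(input_file, staged)
        outdir = workdir / "out"
        outdir.mkdir()
        work(staged, outdir)
        # Copy results to persistent storage before scratch is removed.
        for item in outdir.iterdir():
            if item.is_file():
                shutil.copy2(item, results_dir / item.name)
    return results_dir


def count_lines(src: Path, out: Path) -> None:
    """Example work step: count the lines in the staged input file."""
    with src.open() as fh:
        n = sum(1 for _ in fh)
    (out / "count.txt").write_text(f"{n}\n")
```

For example, `run_in_scratch(Path("input.txt"), Path("results"), count_lines)` would leave `results/count.txt` in place while the scratch copy is discarded.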

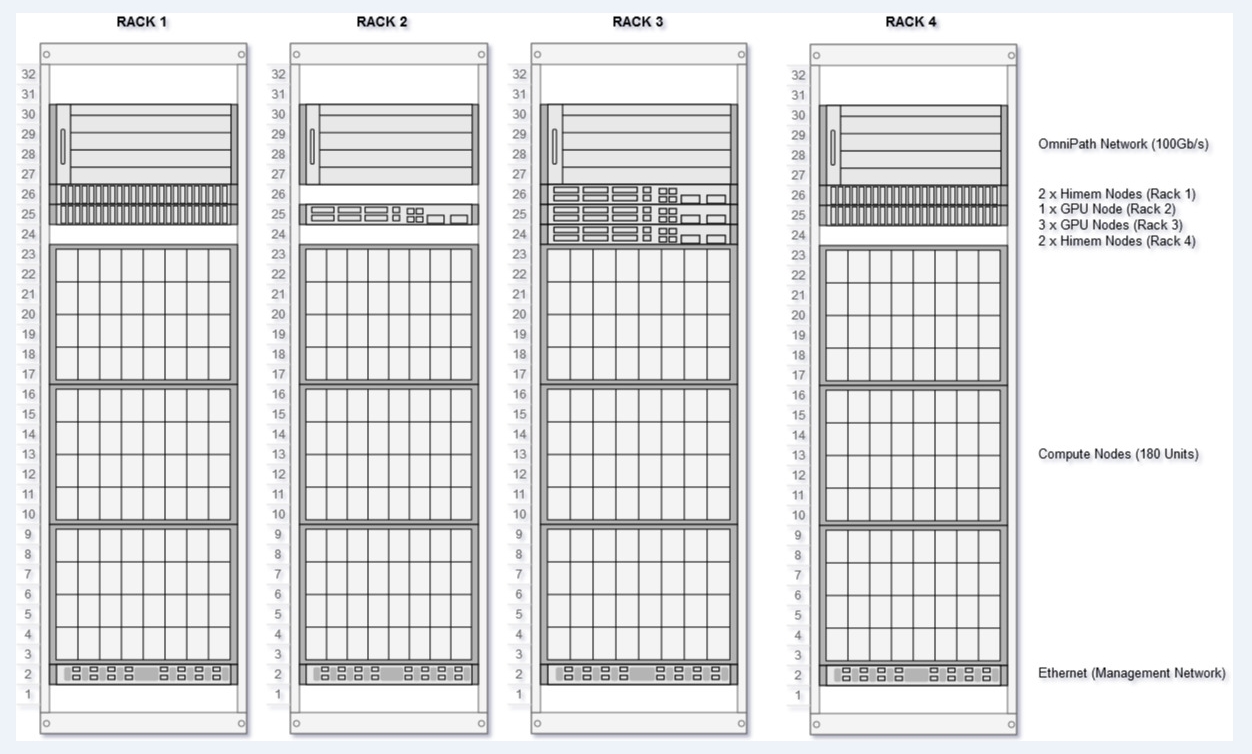

Infrastructure

- 4 racks with dedicated cooling and hot-aisle containment (see diagram below)

- Additional rack for storage and management components

- Dedicated, high-efficiency chiller on the AS3 roof for cooling

- UPS and generator power failover