Programming/OpenMPI


Latest revision as of 15:22, 23 August 2023

Programming Details

MPI defines not only point-to-point communication (e.g., send and receive) but also other communication patterns, such as collective communication. A collective operation is one in which multiple processes take part in a single communication action. In a reliable broadcast, for example, one process has a message at the start of the operation, and by the end of the operation every process in the group has the message.

Message-passing performance and resource utilization are the king and queen of high-performance computing. Open MPI was explicitly designed in such a way that it could operate at the very bleeding edge of high performance: incredibly low latencies for sending short messages, extremely high short message injection rates on supported networks, fast ramp-ups to maximum bandwidth for large messages, etc.

The Open MPI code has three major code modules:

- OMPI - the MPI layer

- ORTE - the Open Run-Time Environment

- OPAL - the Open Portable Access Layer

Programming Models

When looking at programming models, we consider two basic approaches:

- Serial programming

- Message-Passing Parallel Programming

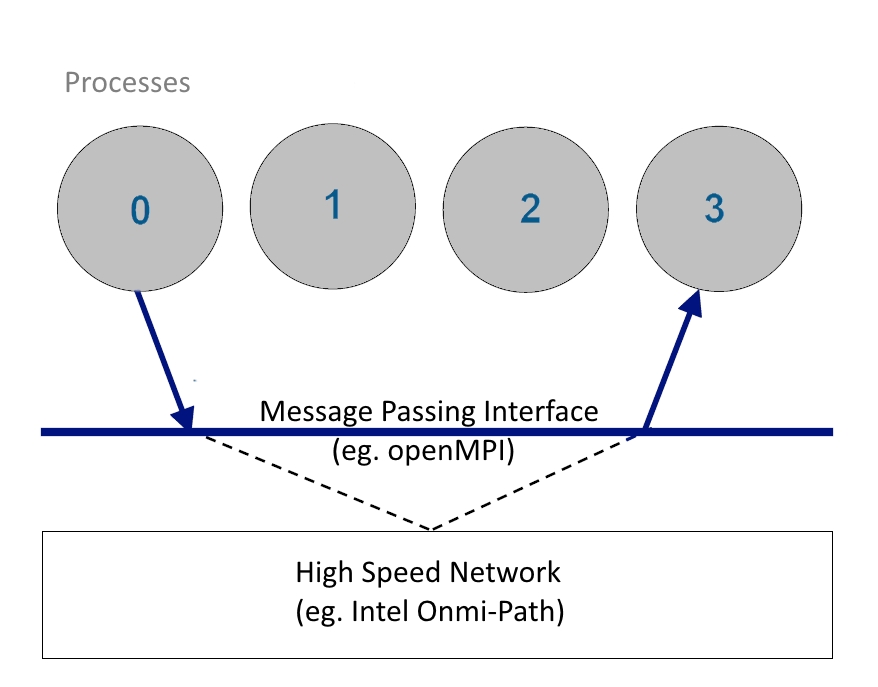

The message-passing model can be thought of as a process together with the program's own data and the parallelism is achieved by having each of these processes cooperate on the same task. This model also has some limitations these are:

- All variables are private to each process.

- All communication between each process by sending and receiving messages (hence the OpenMPI name).

- Most message-passing programs use the Single-Program-Multiple-Data (SPMD) model.

- It is possible to run an MPI-type program on one or more nodes, although if your program is only ever intended to run on one node you should consider openMP instead here.

Below is a data diagram of OpenMPI:

Communication modes

- Sending a message can be either synchronous or asynchronous (e.g. MPI_Ssend is synchronous, and MPI_Bsend, a buffered send, is asynchronous).

- A synchronous send is not completed until the message has started to be received.

- An asynchronous send completes as soon as the message has left the sender, whether or not it has been received. For MPI_Bsend, this happens once the message has been copied into a user-attached buffer.

- Receives are usually synchronous: the receiving process must wait until the message arrives.

Communication types

- Point to point - a transfer between one sending process and one receiving process (e.g. MPI_Send and MPI_Recv).

- Broadcast - one root process sends the same data to every process in the communicator (MPI_Bcast).

- Scatter/Gather data - an MPI_Scatter call splits data held by a root process and sends one part to each process for processing. An MPI_Gather call then collects the results back on the root process.

- Reduction - combine data from several processes into a single result (e.g. a global sum, product, maximum or minimum) with MPI_Reduce.

Communication considerations

- Send and receive calls must match. If they do not, there is a danger of deadlock and your program may hang.

- Most programs do not need to be complicated: many scientific codes have a simple structure, which in turn leads to simple communication patterns.

- Use collective communication where possible, rather than building the same pattern from point-to-point calls.

Program Examples

C Example

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char** argv)
{
    int rank;
    int buf = 0;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    /* Only the root (rank 0) sets the value before the broadcast */
    if (rank == 0)
        buf = 777;

    /* MPI_Bcast is collective: every rank makes the same call.
       On return, buf holds the root's value on every rank. */
    MPI_Bcast(&buf, 1, MPI_INT, 0, MPI_COMM_WORLD);

    if (rank != 0)
        printf("rank %d received %d\n", rank, buf);

    MPI_Finalize();
    return 0;
}
```

Fortran example

```fortran
program hello
    include 'mpif.h'
    integer rank, size, ierror, tag, status(MPI_STATUS_SIZE)

    call MPI_INIT(ierror)
    call MPI_COMM_SIZE(MPI_COMM_WORLD, size, ierror)
    call MPI_COMM_RANK(MPI_COMM_WORLD, rank, ierror)
    print*, 'node', rank, ': Hello world'
    call MPI_FINALIZE(ierror)
end
```

Python example

```python
#!/usr/bin/env python

from mpi4py import MPI

comm = MPI.COMM_WORLD
rank = comm.Get_rank()

if rank == 0:
    data = {'key1' : [7, 2.72, 2+3j],
            'key2' : ( 'abc', 'xyz')}
else:
    data = None

data = comm.bcast(data, root=0)

if rank != 0:
    print ("data is %s and %d" % (data,rank))
else:
    print ("I am master\n")
```

Modules Available

The following modules are available for OpenMPI:

- module add gcc/8.2.0 (GNU compiler)

- module add intel/2018 (Intel compiler)

- module add openmpi/3.0.0/gcc-8.2.0 (Open MPI 3.0.0 built with GCC 8.2.0)

- module add intel/mpi/64/2018 (Intel MPI)

Compilation

C

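```
[username@login01 ~]$ module add gcc/8.2.0
[username@login01 ~]$ module add openmpi/3.0.0/gcc-8.2.0
[username@login01 ~]$ mpicc -o testMPI testMPI.c
```

Use the mpicc wrapper compiler rather than plain gcc. The wrapper adds the MPI include and library flags; without them, gcc cannot find mpi.h or link against the MPI library.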

Fortran

```
[username@login01 ~]$ module add gcc/8.2.0
[username@login01 ~]$ module add openmpi/3.0.0/gcc-8.2.0
[username@login01 ~]$ mpifort -o testMPI testMPI.f03
```

Note: mpifort is the name of the Fortran wrapper compiler introduced in Open MPI v1.7, replacing the older mpif77 and mpif90 wrappers.

Usage Examples

Batch Submission

```
#!/bin/bash
#SBATCH -J MPI-testXX
#SBATCH -N 10
#SBATCH --ntasks-per-node 28
#SBATCH -o %N.%j.%a.out
#SBATCH -e %N.%j.%a.err
#SBATCH -p compute
#SBATCH --exclusive
#SBATCH --mail-user=<your email address>

echo $SLURM_JOB_NODELIST

module purge
module add gcc/8.2.0
module add openmpi/3.0.0/gcc-8.2.0

# The I_MPI_* variables below are read by Intel MPI only; Open MPI ignores them.
export I_MPI_DEBUG=5
export I_MPI_FABRICS=shm:tmi
export I_MPI_FALLBACK=no

mpirun -mca pml cm -mca mtl psm2 /home/user/CODE_SAMPLES/OPENMPI/scatteravg 100
```

```
[username@login01 ~]$ sbatch MPI-demo.job
Submitted batch job 289523
```

Next Steps

- http://mpitutorial.com/tutorials/

- OpenMPI (Wiki)

- https://en.wikipedia.org/wiki/Open_MPI

- https://www.open-mpi.org/

- C Programming

- C++ Programming

- Fortran Programming

- Python Programming
