What is Viper

Latest revision as of 15:34, 8 November 2022


Introduction

Viper is the University of Hull's supercomputer. It is located on-site and has its own dedicated team who administer it and develop applications for it.

A supercomputer is a computer with a much higher level of computing performance than a general-purpose computer. It achieves this performance by letting the user split a large job into smaller computational tasks that run in parallel, or by running the same task many times over different scenarios or data across parallel processing units.
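As a rough illustration of the first approach (splitting a large job into smaller tasks that run in parallel), the minimal Python sketch below divides one large sum into contiguous chunks, hands the chunks to a pool of worker processes on a single machine, and combines the partial results. It is not Viper-specific: the function names, the chunking scheme and the worker count are arbitrary choices for illustration, and on a cluster the same idea is spread across many cores and nodes.

```python
from concurrent.futures import ProcessPoolExecutor


def partial_sum_of_squares(bounds: tuple[int, int]) -> int:
    """One smaller task: the sum of i*i over the half-open range [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n: int, chunks: int) -> list[tuple[int, int]]:
    """Split [0, n) into `chunks` contiguous, non-overlapping pieces."""
    size, remainder = divmod(n, chunks)
    bounds = []
    start = 0
    for k in range(chunks):
        # The first `remainder` chunks take one extra element each.
        stop = start + size + (1 if k < remainder else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Split the large job into smaller tasks, run them in parallel, combine results."""
    pieces = split_range(n, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, pieces))


if __name__ == "__main__":
    n = 1_000_000
    # The combined parallel result should equal the closed form (n-1)n(2n-1)/6.
    print(parallel_sum_of_squares(n) == (n - 1) * n * (2 * n - 1) // 6)
```

Each chunk is independent of the others, which is what allows the pieces to run at the same time; only the final combining step needs all the partial results.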

The point of having a high-performance computer is that the individual nodes can work together to solve a problem larger than any one computer could easily solve. And, just like people, the nodes need to be able to talk to one another in order to work together meaningfully. Computers talk to each other over networks, and a variety of network (or interconnect) options are available for clusters.

Supercomputers generally aim for the maximum in capability computing rather than capacity computing. Capability computing is typically thought of as using the maximum computing power to solve a single large problem in the shortest amount of time. Often a capability system is able to solve a problem of a size or complexity that no other computer can, e.g., a very complex weather simulation application.

A high-performance computer like Viper has many of the same elements as a desktop computer (processors, memory, disk, operating system), just more of them. High-performance computers also split some tasks off into separate elements to gain performance. On Viper, for example, disk storage is handled by a separate server with all of the storage directly attached to it. This server is connected over a high-speed network to all of the other individual computers (referred to as nodes).

Viper's real compute power comes from its large number of standard compute nodes (Compute): 180 of them. Each of these nodes has 28 processing cores, giving a total of 5,040 processing cores (180 × 28). Viper also includes some specialised nodes suited to particular applications: high-memory (Highmem) nodes for large-memory modelling and GPU accelerator (GPU) nodes for machine learning.

Supercomputers are used widely, and new and novel uses for them continue to emerge every day.

Viper is used in areas including:

- Astrophysics

- BioEngineering

- Business School

- Chemistry

- Computer Science

- Computational Linguistics

- Geography

- And many more

Like almost all other supercomputers, Viper runs on Linux, which is similar to UNIX in many ways and has a wide body of supporting software.

Using Viper

When you log in to Viper, you are connected to one of its two login nodes, which provide a Linux command-line interface. From there you can load modules (i.e. software), download data, compile code and submit jobs to the cluster. Note that the login nodes are not the compute cluster itself; the cluster is accessed by submitting a job to the scheduler (SLURM).

Module Add

Loading a module sets up the environment for the software you need, such as a specific compiler or an application like Ansys or Matlab. For more information see the application module guide.

Batch Script

A batch script describes the resources and parameters the scheduler needs in order to run your job on the compute cluster, followed by the commands the job should run. For more information see batch jobs.
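Batch scripts are usually written as shell scripts, but Slurm's sbatch reads `#SBATCH` directives from the comment lines at the top of any script that starts with an interpreter line, so a Python script also works. The minimal sketch below assumes that the compute queue is a Slurm partition named `compute`; the job name, task count and time limit are illustrative values, not recommendations for Viper. When it runs under Slurm it reports a few of the environment variables Slurm sets for a job (`SLURM_JOB_ID`, `SLURM_NTASKS`, `SLURM_JOB_NODELIST`); outside Slurm it falls back to placeholder values. The `describe_allocation` function is a hypothetical helper.

```python
#!/usr/bin/env python3
#SBATCH --job-name=example_job
#SBATCH --partition=compute
#SBATCH --nodes=1
#SBATCH --ntasks=28
#SBATCH --time=00:10:00
# The #SBATCH lines must come before any executable line: sbatch stops
# reading directives at the first line that is not a comment.
# Assumption: the partition name "compute" matches Viper's compute queue.

import os
from typing import Mapping


def describe_allocation(env: Mapping[str, str]) -> str:
    """Summarise the allocation using variables Slurm sets for the job."""
    job_id = env.get("SLURM_JOB_ID", "not-running-under-slurm")
    ntasks = env.get("SLURM_NTASKS", "1")
    nodes = env.get("SLURM_JOB_NODELIST", "localhost")
    return f"job {job_id}: {ntasks} task(s) on {nodes}"


if __name__ == "__main__":
    print(describe_allocation(os.environ))
```

Saved as, say, `example_job.py`, it would be submitted from a login node with `sbatch example_job.py`. Asking for 28 tasks on one node matches the 28 cores of a standard compute node.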

Batch Submission

The batch script is submitted to the scheduler (SLURM), which then allocates the requested resources and runs your job. If the resources are not available at that time, the job waits in the queue, and the scheduler uses a multi-factor algorithm to decide which waiting job runs next. For more information see slurm how-to.
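To give a feel for what a multi-factor algorithm means, the sketch below follows the general shape of Slurm's multifactor priority plugin: each pending job gets a priority that is a weighted sum of factors normalised to the range 0 to 1 (here, how long the job has waited, the user's fair-share standing and the job size), and higher-priority jobs are considered first. Only three factors are modelled. The weights, job names and factor values are invented for illustration and are not Viper's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class PendingJob:
    name: str
    age: float        # 0..1, grows the longer the job has been waiting
    fairshare: float  # 0..1, higher for users who have used less than their share
    job_size: float   # 0..1, relative size of the resource request


# Hypothetical weights for illustration only (not Viper's real settings).
WEIGHTS = {"age": 1000, "fairshare": 10000, "job_size": 500}


def priority(job: PendingJob) -> float:
    """Weighted sum of normalised factors, in the style of Slurm's multifactor plugin."""
    for value in (job.age, job.fairshare, job.job_size):
        if not 0.0 <= value <= 1.0:
            raise ValueError("factors must be normalised to [0, 1]")
    return (WEIGHTS["age"] * job.age
            + WEIGHTS["fairshare"] * job.fairshare
            + WEIGHTS["job_size"] * job.job_size)


def queue_order(jobs: list[PendingJob]) -> list[str]:
    """Return job names from highest to lowest priority."""
    return [j.name for j in sorted(jobs, key=priority, reverse=True)]


if __name__ == "__main__":
    jobs = [
        PendingJob("long-wait", age=0.9, fairshare=0.2, job_size=0.1),
        PendingJob("light-user", age=0.1, fairshare=0.8, job_size=0.1),
        PendingJob("big-job", age=0.3, fairshare=0.2, job_size=1.0),
    ]
    print(queue_order(jobs))  # ['light-user', 'long-wait', 'big-job']
```

With these made-up weights the fair-share factor dominates, so the job from the lightly-using user (priority 8150) moves ahead of the job that has waited longest (2950), even though it has waited the least.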

Interactive Sessions

An interactive session is a slightly different way of using Viper: it gives you the power of the compute cluster through a session you work in directly, rather than through a batch script. For more information see interactive session.

Queues available

As mentioned above, there are three main queues to which jobs can be sent:

- compute - this queue will submit jobs to the 180 compute nodes

- Highmem - this queue will submit jobs to the high-memory nodes

- GPU - this queue will submit jobs to the GPU accelerator nodes

These queues are managed by Viper's job scheduler, SLURM. For more information see SLURM.
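Choosing a queue comes down to what the job needs. The hypothetical helper below encodes the rule of thumb implied by the hardware described under Viper Specifications: jobs that need a GPU go to the GPU queue, jobs that need more memory than a standard compute node's 128 GB go to Highmem (1 TB per node), and everything else goes to compute. The lowercase partition names and the `choose_queue` function are assumptions for illustration, so check the SLURM guide for the names Viper actually uses; the thresholds are installed memory, and the amount available to jobs may be lower.

```python
# Installed memory per node, from the Viper Specifications section (GB).
COMPUTE_NODE_MEM_GB = 128
HIGHMEM_NODE_MEM_GB = 1024  # 1 TB, taken here as 1024 GB


def choose_queue(mem_gb_per_node: float, needs_gpu: bool = False) -> str:
    """Hypothetical helper: pick a queue (Slurm partition) for a single-node job."""
    if needs_gpu:
        # GPU nodes are compute nodes with an added accelerator,
        # so they have the same 128 GB of RAM.
        if mem_gb_per_node > COMPUTE_NODE_MEM_GB:
            raise ValueError("GPU nodes have 128 GB of RAM")
        return "gpu"
    if mem_gb_per_node <= COMPUTE_NODE_MEM_GB:
        return "compute"
    if mem_gb_per_node <= HIGHMEM_NODE_MEM_GB:
        return "highmem"
    raise ValueError("request exceeds the memory of any single node")
```

For example, `choose_queue(64)` returns `"compute"`, while `choose_queue(512)` returns `"highmem"` because 512 GB will not fit on a standard compute node.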

Viper Specifications

Physical Hardware

Viper runs the Linux operating system and has approximately 5,500 processing cores in total. Its main hardware components are:

- 180 compute nodes, each with 2x 14-core Intel Broadwell E5-2680v4 processors (2.4–3.3 GHz), 128 GB DDR4 RAM

- 4 high-memory nodes, each with 4x 10-core Intel Haswell E5-4620v3 processors (2.0 GHz), 1 TB DDR4 RAM

- 4 GPU nodes, each identical to a compute node with the addition of one Nvidia Ampere A40 GPU

- 1 additional accelerator node with 2x Nvidia P100 GPUs and 128 GB RAM

- 2 visualisation nodes with 2x Nvidia GeForce GTX 980 Ti

- Intel Omni-Path interconnect (100 Gb/s node-switch and switch-switch)

- 500 TB parallel file system (BeeGFS)
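The core counts above can be checked by multiplying out the node list. The short Python sketch below does this for the node types whose core counts are stated on this page (compute: 2 × 14 cores; Highmem: 4 × 10 cores; the 4 A40 GPU nodes, which are identical to compute nodes). Other nodes, such as the visualisation and login nodes, are left out because their core counts are not given here, so the result is a lower bound rather than the exact figure behind the "approximately 5,500" quoted above.

```python
# Node types whose core counts are stated on this page:
# (number of nodes, processors per node, cores per processor)
NODE_TYPES = {
    "compute": (180, 2, 14),  # Intel Broadwell E5-2680v4
    "highmem": (4, 4, 10),    # Intel Haswell E5-4620v3
    "gpu": (4, 2, 14),        # identical to compute nodes, plus an A40 GPU
}


def cores_per_type(node_types: dict[str, tuple[int, int, int]]) -> dict[str, int]:
    """Total cores for each node type: nodes x processors x cores per processor."""
    return {name: n * procs * cores for name, (n, procs, cores) in node_types.items()}


if __name__ == "__main__":
    totals = cores_per_type(NODE_TYPES)
    print(totals)                # {'compute': 5040, 'highmem': 160, 'gpu': 112}
    print(sum(totals.values()))  # 5312
```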

The compute and highmem nodes are stateless, while the GPU and visualisation nodes are stateful.

- Note: stateless nodes have no persistent operating system storage between boots (the term originates from hard drives being the usual persistent storage mechanism). Stateless does not necessarily mean diskless: Viper's stateless nodes also have 128 GB of temporary SSD space.
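Because a stateless node keeps nothing between boots, anything written to its local SSD is best treated as scratch space. A common pattern, sketched below in Python, is to do I/O-heavy work in a temporary directory on local disk and copy only the results back to persistent storage (on Viper, the BeeGFS parallel file system) before the job ends. The function name, the placeholder "work" and the directory arguments are hypothetical, and no particular Viper path is assumed; the sketch relies on the standard library's `tempfile` module, which picks its default location from the `TMPDIR` environment variable when it is set.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Optional


def run_with_local_scratch(results_dir: Path, scratch_root: Optional[str] = None) -> Path:
    """Do the work in node-local scratch space, then copy results to persistent storage.

    scratch_root=None lets tempfile choose a location (it honours $TMPDIR).
    The scratch directory and everything in it is deleted when the block exits.
    """
    results_dir.mkdir(parents=True, exist_ok=True)
    with tempfile.TemporaryDirectory(dir=scratch_root) as scratch:
        work = Path(scratch)
        # Placeholder for the real computation: write an intermediate file
        # and a result file into local scratch.
        (work / "intermediate.dat").write_text("temporary data\n")
        (work / "result.txt").write_text("final answer\n")
        # Copy back only what must persist; the rest is discarded with the scratch dir.
        destination = results_dir / "result.txt"
        shutil.copy2(work / "result.txt", destination)
    return destination
```

Copying results back explicitly matters because, on a stateless node, the scratch contents cannot be relied on once the job or the node's current boot ends.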

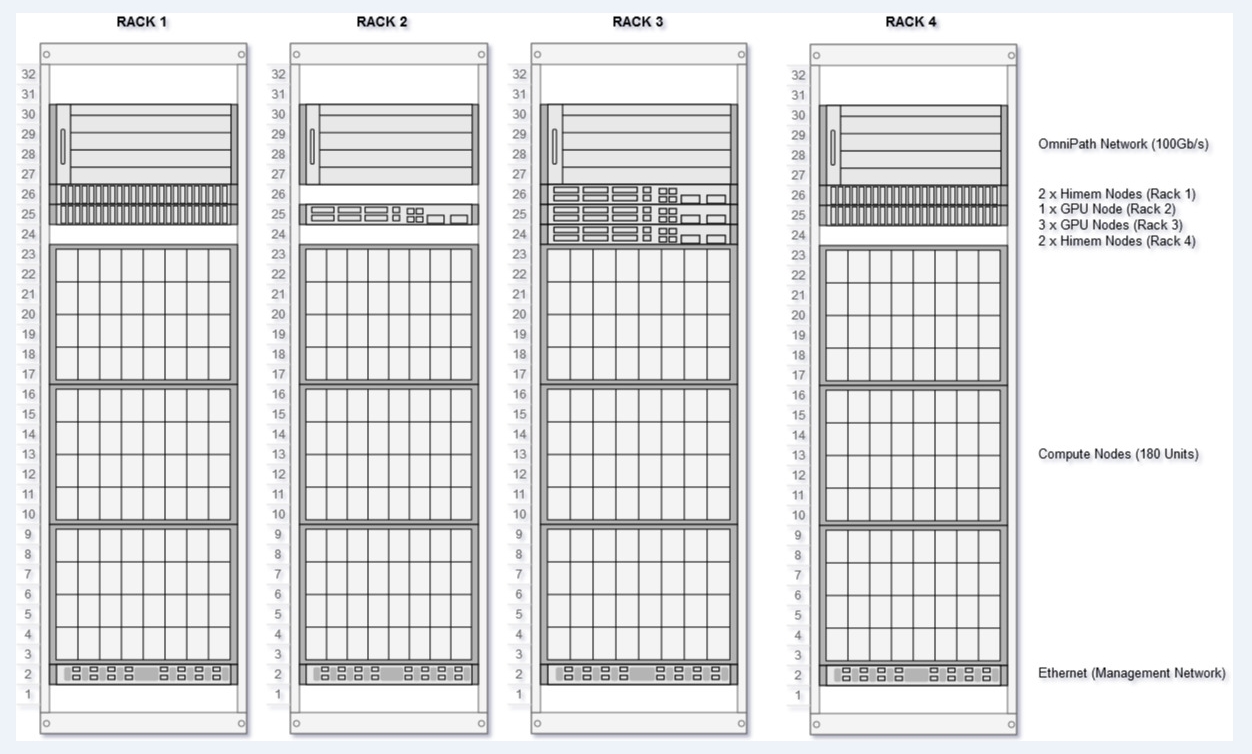

Infrastructure

- 4 racks with dedicated cooling and hot-aisle containment (see diagram below)

- Additional rack for storage and management components

- Dedicated, high-efficiency chiller on AS3 roof for cooling

- UPS and generator power failover

Next Steps

- Batch Jobs

- Viper Interactive session guide

- Application module guide

- Slurm how-to

- Guide to using Viper's visualisation nodes