Programming/OpenMP


Introduction to OpenMP

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran on most platforms, including our own HPC.

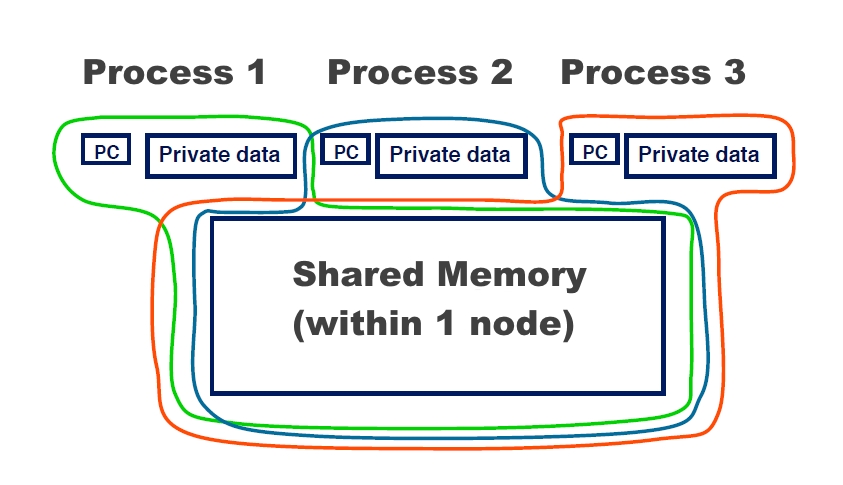

The programming model for shared memory is based on the notion of threads:

- Threads are like processes, except that threads can share memory with each other (as well as having private memory)

- Shared data can be accessed by all threads

- Private data can only be accessed by the owning thread

- Different threads can follow different flows of control through the same program

  - each thread has its own program counter

- Usually one thread is run per CPU/core

  - but there could be more

  - some hardware supports multiple threads per core (e.g. Intel Hyper-Threading)

This programming model runs within a single node, but it can also be combined with MPI in a hybrid model.

An application built with the hybrid model of parallel programming can run on a computer cluster using both OpenMP and Message Passing Interface (MPI), such that OpenMP is used for parallelism within a (multi-core) node while MPI is used for parallelism between nodes. There have also been efforts to run OpenMP on software distributed shared memory systems.

Programming Details

OpenMP is designed for multi-processor/multi-core, shared-memory machines. The underlying architecture can be either uniform memory access (UMA) or non-uniform memory access (NUMA).

OpenMP is an Application Program Interface (API) that may be used to explicitly direct multi-threaded, shared-memory parallelism. It comprises three primary API components:

- Compiler Directives

- Runtime Library Routines

- Environment Variables

OpenMP compiler directives are used for various purposes:

- Spawning a parallel region

- Dividing blocks of code among threads

- Distributing loop iterations between threads

- Serializing sections of code

- Synchronization of work among threads

Programming Example

C Example

```c
#include <stdio.h>
#include <stdlib.h>

/* compile with gcc -o test2 -fopenmp test2.c */

int main(int argc, char** argv)
{
    int i = 0;
    int size = 20;
    int* a = (int*) calloc(size, sizeof(int));
    int* b = (int*) calloc(size, sizeof(int));
    int* c;

    if ( a == NULL || b == NULL )
        return 1;

    /* initialise the input arrays serially */
    for ( i = 0; i < size; i++ )
    {
        a[i] = i;
        b[i] = size-i;
        printf("[BEFORE] At %d: a=%d, b=%d\n", i, a[i], b[i]);
    }

    /* a and b are shared by all threads; each thread has its own c and i */
    #pragma omp parallel shared(a,b) private(c,i)
    {
        /* each thread allocates its own scratch buffer */
        c = (int*) calloc(3, sizeof(int));

        /* the loop iterations are divided among the threads */
        #pragma omp for
        for ( i = 0; i < size; i++ )
        {
            c[0] = 5*a[i];
            c[1] = 2*b[i];
            c[2] = -2*i;
            a[i] = c[0]+c[1]+c[2];

            c[0] = 4*a[i];
            c[1] = -1*b[i];
            c[2] = i;
            b[i] = c[0]+c[1]+c[2];
        }

        free(c);
    }

    for ( i = 0; i < size; i++ )
    {
        printf("[AFTER] At %d: a=%d, b=%d\n", i, a[i], b[i]);
    }

    free(a);
    free(b);
    return 0;
}
```

Fortran Example

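```fortran
program omp_par_do
  implicit none
  integer, parameter :: n = 100
  real, dimension(n) :: dat, result
  integer :: i

  ! initialise the input data (placeholder values)
  do i = 1, n
     dat(i) = real(i)
  end do

  ! the iterations of this loop are shared among the threads
  !$OMP PARALLEL DO
  do i = 1, n
     result(i) = my_function(dat(i))
  end do
  !$OMP END PARALLEL DO

  print *, 'sum of results = ', sum(result)

contains

  function my_function(d) result(y)
    real, intent(in) :: d
    real :: y
    ! do something complex with data to calculate y;
    ! the line below is only a placeholder
    y = 2.0 * d
  end function my_function

end program omp_par_do
```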

Tips for programming

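- Mistyping the sentinel (e.g. !OMP or #pragma opm) typically raises no error message: the line is treated as an ordinary comment or an unknown pragma and ignored. GCC only warns about unknown pragmas when -Wall or -Wunknown-pragmas is given.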

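- Don’t forget that private variables are uninitialised on entry to parallel regions; use firstprivate if each thread's copy should start with the value the variable had before the region.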

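- If you have large private data structures, it is possible to run out of stack space.

  - The stack size of threads other than the master thread can be controlled with the OMP_STACKSIZE environment variable (e.g. export OMP_STACKSIZE=64M); the master thread uses the normal process stack, which is limited by the shell (ulimit -s).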

- Write code that also works without OpenMP. The macro _OPENMP is defined only when the code is compiled with the OpenMP switch (e.g. -fopenmp), so OpenMP-specific code can be guarded with #ifdef _OPENMP.

- Be aware that the overhead of executing a parallel region is typically in the range of tens of microseconds

  - the exact figure depends on the compiler, the hardware and the number of threads

- Tuning the chunk size for static or dynamic schedules can be tricky because the optimal chunk size can depend quite strongly on the number of threads.

- Make sure your timer actually measures wall-clock time: use omp_get_wtime() rather than clock(), which on Linux reports the CPU time consumed by all threads combined.

Compilation

The programs can be compiled as follows; the Intel compiler is also available:

For C

```
[username@login01 ~]$ module add gcc/4.9.3
[username@login01 ~]$ gcc -o test2 -fopenmp test2.c
```

For Fortran

```
[username@login01 ~]$ module add gcc/4.9.3
[username@login01 ~]$ gfortran -o test2 -fopenmp test2.f90
```

Modules Available

The following modules are available for OpenMP:

- module add gcc/4.9.3 (GNU compiler)

- module add intel/compiler/64/2016.2.181 (Intel compiler; enable OpenMP with -qopenmp instead of -fopenmp)

OpenMP support is built into the compiler itself, so no additional module needs to be loaded beyond the compiler.

Usage Examples

Batch example

```bash
#!/bin/bash
#SBATCH -J openmpi-single-node
#SBATCH -N 1
#SBATCH --ntasks-per-node 28
#SBATCH -o %N.%j.%a.out
#SBATCH -e %N.%j.%a.err
#SBATCH -p compute
#SBATCH --exclusive

echo $SLURM_JOB_NODELIST

module purge
module add gcc/4.9.3

# Intel MPI settings; these have no effect on a pure OpenMP program
export I_MPI_DEBUG=5
export I_MPI_FABRICS=shm:tmi
export I_MPI_FALLBACK=no

# one OpenMP thread per core (28 per node here)
export OMP_NUM_THREADS=28

/home/user/CODE_SAMPLES/OPENMP/demo
```

```
[username@login01 ~]$ sbatch demo.job
Submitted batch job 289552
```

Further Information

Specific

- https://en.wikipedia.org/wiki/OpenMP

- http://www.openmp.org/

- https://computing.llnl.gov/tutorials/openMP/

- http://fortranwiki.org/fortran/show/OpenMP

- Intel training videos on YouTube

General

- https://en.wikipedia.org/wiki/Open_MPI

- https://www.open-mpi.org/

- C Programming

- C++ Programming

- Fortran Programming

- Python Programming
